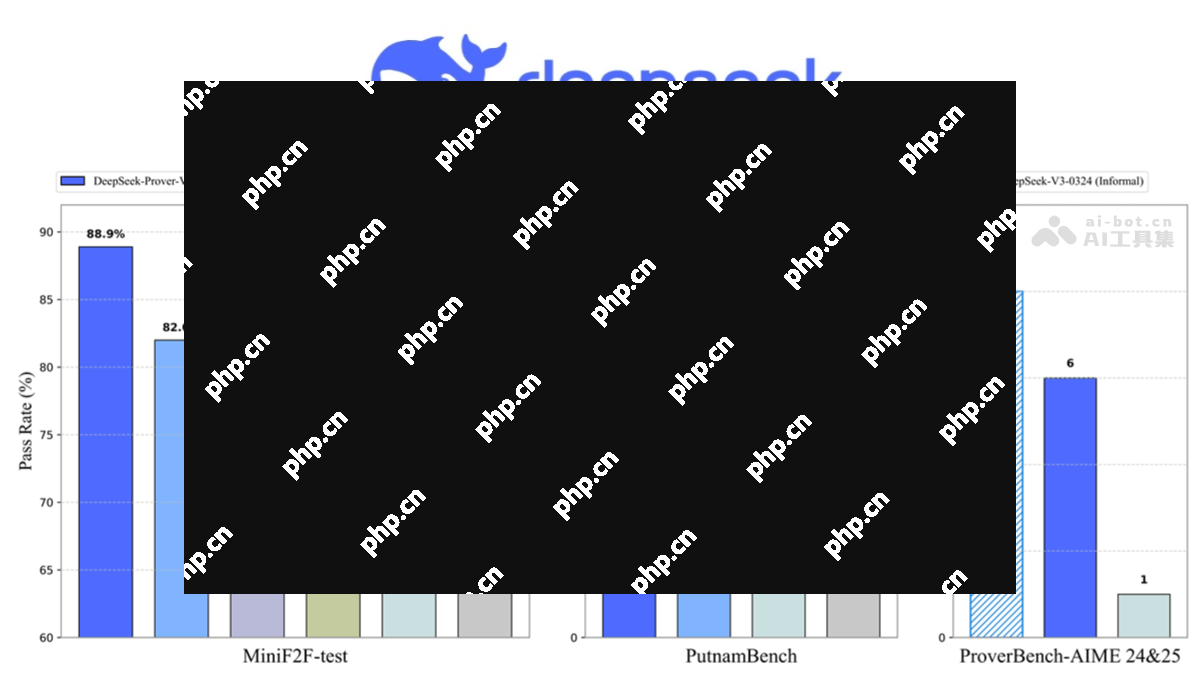

DeepSeek-Prover-V2 is a very large language model for mathematical reasoning developed by the DeepSeek team. It comes in two versions, DeepSeek-Prover-V2-671B and DeepSeek-Prover-V2-7B, with 671 billion and 7 billion parameters respectively, and is an upgrade of DeepSeek-Prover-V1.5. The model adopts a Mixture-of-Experts (MoE) architecture, supports ultra-long contexts and multiple numeric precisions, and can translate natural-language problems into formal proof code. Its multi-head latent attention (MLA) architecture compresses the key-value (KV) cache, which reduces memory usage and computational overhead during inference. Training data is generated by a recursive theorem-proving pipeline, and training follows a three-stage paradigm: pre-training, math-specific training, and fine-tuning with reinforcement learning from human feedback.

In terms of performance, DeepSeek-Prover-V2 excels on mathematical reasoning datasets, with a reported pass rate of 88.9% on formal theorem proving (on the MiniF2F-test benchmark). The team also released the ProverBench dataset for evaluating model performance. The model is open source and available on Hugging Face, and it is suited to formal theorem proving, automatic theorem verification, logical reasoning training, and similar scenarios.
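The memory saving from compressing the KV cache can be estimated with simple arithmetic. The sketch below compares the per-token cache size of standard multi-head attention with an MLA-style cache that stores one compressed latent vector per layer. All dimensions (`NUM_LAYERS`, `NUM_HEADS`, `HEAD_DIM`, `LATENT_DIM`, `ROPE_DIM`) and the helper `kv_cache_bytes_per_token` are illustrative assumptions, not figures taken from this article, so treat the result as an order-of-magnitude illustration only.

```python
def kv_cache_bytes_per_token(num_layers, per_layer_width, bytes_per_value=2):
    """Bytes cached for one token across all layers (2 bytes per value = BF16)."""
    return num_layers * per_layer_width * bytes_per_value


# Assumed, illustrative dimensions (hypothetical, not confirmed by the article).
NUM_LAYERS = 61
NUM_HEADS = 128
HEAD_DIM = 128
LATENT_DIM = 512  # width of the compressed joint key-value vector
ROPE_DIM = 64     # small positional key kept alongside the latent vector

# Standard multi-head attention caches a full key and a full value per head.
mha_width = 2 * NUM_HEADS * HEAD_DIM
# An MLA-style cache keeps only the latent vector plus the positional key.
mla_width = LATENT_DIM + ROPE_DIM

mha_bytes = kv_cache_bytes_per_token(NUM_LAYERS, mha_width)
mla_bytes = kv_cache_bytes_per_token(NUM_LAYERS, mla_width)
ratio = mha_bytes / mla_bytes

print(f"MHA cache per token: {mha_bytes} bytes")
print(f"MLA cache per token: {mla_bytes} bytes")
print(f"Reduction factor:    {ratio:.1f}x")
```

Under these assumed dimensions the cache shrinks by a large constant factor, which is what makes very long context windows practical on limited memory.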

Key features of DeepSeek-Prover-V2 include:

- Math problem solving: Capable of handling a wide range of problems, from basic algebra to advanced mathematics, and excelling in automatically proving theorems and performing complex calculations.

- Formal reasoning training: Training on formal reasoning in the Lean 4 framework, combined with reinforcement learning and large-scale synthetic data, significantly improves the model's automated proving ability.

- Efficient training and deployment: Uses the safetensors file format and supports multiple numeric precisions such as BF16, FP8, and F32, which makes training and deployment faster and less resource-intensive.

- Extra-long context handling: Supports a context window of up to 163,840 tokens, enough for large-scale mathematical proofs with long chains of reasoning.

- Dual-mode problem solving: Provides a fast mode (directly generates a code answer) and a logic mode (breaks the reasoning down step by step) to suit different scenarios.

- Knowledge distillation and optimization: Uses knowledge distillation to improve the performance of small models, enabling high-performance inference on resource-constrained devices.
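To make the formal-proof task concrete, here is a small hand-written Lean 4 example of the kind of output such a model is asked to produce. It is not generated by DeepSeek-Prover-V2; the theorem name `two_mul_le_sq_sum` is hypothetical, and the example assumes the Mathlib library is available. The natural-language problem is "show that 2ab ≤ a² + b² for all real numbers a and b".

```lean
import Mathlib

/-- For all real numbers `a` and `b`, `2ab ≤ a² + b²`. -/
theorem two_mul_le_sq_sum (a b : ℝ) : 2 * a * b ≤ a ^ 2 + b ^ 2 := by
  -- The key fact is (a - b)² ≥ 0, which expands to a² - 2ab + b² ≥ 0.
  nlinarith [sq_nonneg (a - b)]
```

The Lean checker either accepts or rejects the proof, which gives an unambiguous correctness signal that can be used both for evaluation and as a training reward.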

The technical principles of DeepSeek-Prover-V2 include:

- Multi-head latent attention (MLA) architecture: The model adopts a multi-head latent attention architecture that compresses the key-value cache, reducing memory usage and computational overhead during inference so that the model can still run efficiently in resource-limited environments.

- Mixture-of-Experts (MoE) architecture: The model is based on a Mixture-of-Experts architecture and uses the Lean 4 framework for formal reasoning training. Combining reinforcement learning with large-scale synthetic data strengthens its automated proving ability.

- File format and numeric precision: DeepSeek-Prover-V2-671B uses the safetensors file format and supports several numeric precisions, including BF16, FP8, and F32, so the model can be trained and deployed faster and with fewer resources.

- Reinforcement learning and training paradigm: DeepSeek-Prover-V2 adopts a three-stage training paradigm: pre-training, math-specific training, and fine-tuning with reinforcement learning from human feedback (RLHF). In the reinforcement learning phase, the model uses the GRPO algorithm, which samples a group of candidate proofs for each theorem and updates the policy based on their relative rewards. Curriculum learning gradually increases the difficulty of the training tasks, guiding the model toward more complex proofs.

- Formal prover integration: DeepSeek-Prover-V2 integrates a formal prover that can translate natural-language problems into code for proof assistants such as Coq and Lean.
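The group-relative reward step that GRPO relies on can be sketched in a few lines of Python. This is a minimal illustration, not DeepSeek's training code: the function name `group_relative_advantages`, the binary rewards (1.0 if a proof checker accepts a candidate, 0.0 otherwise), the use of the population standard deviation, and the `eps` term are all assumptions made for the example.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-8):
    """Score each candidate relative to the other candidates for the same theorem.

    Each reward is centered on the group mean and scaled by the group
    standard deviation; eps avoids division by zero when all rewards match.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


# Hypothetical rewards for four sampled proofs of one theorem:
# 1.0 means the proof checker accepted the proof, 0.0 means it was rejected.
rewards = [1.0, 0.0, 0.0, 1.0]
advantages = group_relative_advantages(rewards)

for reward, advantage in zip(rewards, advantages):
    print(f"reward={reward:.1f} advantage={advantage:+.3f}")
```

Accepted proofs receive positive advantages and rejected ones negative advantages, so the policy update favors the better candidates within each group. When every candidate gets the same reward, all advantages are zero and that theorem contributes no learning signal, which is one reason a curriculum of suitably difficult problems matters.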

The project links for DeepSeek-Prover-V2 include:

- GitHub repository: https://www.php.cn/link/1ae6ef6d58b4b5c4120321a9786c100d

- Hugging Face model library:

  - DeepSeek-Prover-V2-671B: https://www.php.cn/link/ea4617226119a78dda076158cbaef41d

  - DeepSeek-Prover-V2-7B: https://www.php.cn/link/8108e7580d35a6df529b4cd78137c5aa

Application scenarios of DeepSeek-Prover-V2 include:

- Education: DeepSeek-Prover-V2 can serve as a powerful teaching aid that helps students and teachers solve complex math problems.

- Scientific research: DeepSeek-Prover-V2 can assist researchers with complex mathematical modeling and theoretical verification.

- Engineering design: DeepSeek-Prover-V2 can be applied to design optimization and simulation testing.

- Financial analysis: In the financial sector, DeepSeek-Prover-V2 can be used for risk assessment and investment strategy analysis.

- Software development: During software development, DeepSeek-Prover-V2 can assist developers in algorithm design and performance optimization.
